CSIG International Online Seminar on Image and Graphics Technology, Sessions 11-12, to Be Held on May 24-25

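This year, the stunning debut of the text-to-video large model SORA sparked widespread discussion and attention across the industry. Yet while we marvel at how AIGC (Artificial Intelligence Generated Content) technology can produce results realistic enough to pass for the real thing, we also find that these AI-generated works contain many flaws in their details. This is mainly because existing deep learning models lack boundary conditions guided by prior knowledge during content generation. We can therefore expect the next stage of AIGC development to focus on how to incorporate prior knowledge such as latent semantics, topological logic, and physical principles, in order to generate virtual content with more vivid and realistic details.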

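On this theme, the China Society of Image and Graphics (CSIG) is holding Sessions 11-12 of the CSIG International Online Seminar on Image and Graphics Technology, devoted to the special topic Conditioned Dynamic Modeling & Editing. The seminar will take place in two parts: Friday, May 24, 2024, 15:30-17:30, and Saturday, May 25, 2024, 9:30-10:30.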

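The seminar has invited three internationally renowned scholars from the United Kingdom, the United States, and China. They will share their latest research in AI-based content generation and discuss the field's current challenges and future trends. We warmly welcome colleagues from academia and industry to take part and explore the future development and applications of AIGC technology together!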

Host: China Society of Image and Graphics (CSIG)

Organizers: CSIG International Cooperation and Exchange Working Committee; Women in Science and Technology Working Committee

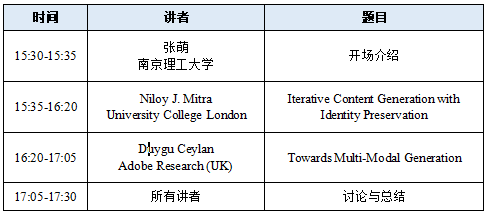

Time: Friday, May 24, 2024, 15:30-17:30 (Part 1)

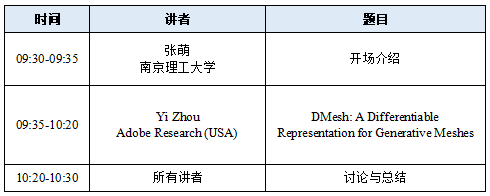

Saturday, May 25, 2024, 09:30-10:30 (Part 2)

Tencent Meeting: 182-324-517

Live stream: https://meeting.tencent.com/l/PTatQESfNEHf


Agenda

Part 1 (2024/5/24, Friday)

Part 2 (2024/5/25, Saturday)

Speaker Bios

Niloy J. Mitra

University College London

Adobe Research

Niloy J. Mitra leads the Smart Geometry Processing group in the Department of Computer Science at University College London and the Adobe Research London Lab. He received his Ph.D. from Stanford University under the guidance of Leonidas Guibas. His research focuses on developing machine learning frameworks for generative models for high-quality geometric and appearance content for CG applications. He was awarded the Eurographics Outstanding Technical Contributions Award in 2019, the British Computer Society Roger Needham Award in 2015, and the ACM SIGGRAPH Significant New Researcher Award in 2013. Furthermore, he was elected as a fellow of Eurographics in 2021 and served as the Technical Papers Chair for SIGGRAPH in 2022. His work has also earned him a place in the SIGGRAPH Academy in 2023. Besides research, Niloy is an active DIYer and loves reading, cricket, and cooking. For more, please visit https://geometry.cs.ucl.ac.uk.

Talk title: Iterative Content Generation with Identity Preservation

Abstract: Foundational models using language and image have emerged as successful generative models. In this talk, I will discuss how we can revisit some of the core geometry processing problems using language/image foundational models as priors. We will report on our attempts to mix classical representations and foundational models to come up with universal algorithms that do not require huge amounts of 3D training data and discuss open challenges ahead. I will discuss applications in shape correspondence, image generation, and image editing.

Duygu Ceylan

Adobe Research

Duygu Ceylan is a senior research scientist and manager at Adobe Research. Prior to joining Adobe in 2014, Duygu obtained her PhD degree from EPFL, where she worked with Prof. Mark Pauly. Duygu received the Eurographics PhD Award in 2015 and the Eurographics Young Researcher Award in 2020. She also won the Adobe Tech Excellence award in 2023. Her research interests include using machine learning techniques to infer and analyze 3D information from images and videos, focusing specifically on humans. She is excited to work at the intersection of computer vision and graphics, where she explores new methods to bridge the gap between 2D & 3D.

Talk title: Towards Multi-Modal Generation

Abstract: We are witnessing an impressive pace of innovation in the generative AI space. The 2D image domain is often at the frontier of this innovation, followed by trends to extend the success to domains such as videos or 3D. While these seem like different domains, one can argue that they are in fact very much connected. In this talk, I will talk about some recent efforts that leverage the knowledge of foundational models trained for a particular domain to address tasks in other domains. I will also present thoughts around future opportunities that can leverage this tight connection to go towards universal generation models.

Yi Zhou

Adobe Research

Yi Zhou is a research scientist at Adobe. She received her PhD from the University of Southern California under the supervision of Dr. Hao Li and her Master's and Bachelor's degrees from Shanghai Jiao Tong University under the supervision of Dr. Shuangjiu Xiao. Her research focuses on 3D learning and 3D vision, especially on creating digital humans and autonomous virtual avatars. Her work mainly lies in 3D human modeling, reconstruction, motion synthesis, and simulation.

Talk title: DMesh: A Differentiable Representation for Generative Meshes

Abstract: In the field of computer graphics, the mesh is a widely favored representation for modeling and rendering 3D characters and objects. Traditional mesh formulations, however, are not directly compatible with deep learning architectures due to their non-differentiable structural nature. Consequently, many researchers have turned to differentiable intermediaries, such as implicit functions or NeRF, subsequently converting these intermediaries back into meshes for practical applications, albeit at the cost of some inherent mesh properties. In contrast, a smaller group of researchers, myself included, have pursued an alternative route aiming to make the mesh itself differentiable. This talk will delve into the challenges we've faced and will introduce our latest innovation, DMesh, a differentiable version of the mesh. We will discuss how DMesh can revitalize the mesh's utility in the evolving field of AI-driven computer graphics.

Host Bio

Meng Zhang

Nanjing University of Science and Technology

Meng Zhang is an Associate Professor at Nanjing University of Science and Technology, China. Before that, she spent three years working as a postdoctoral researcher at UCL, UK. She received her Ph.D. from the Department of Computer Science and Technology, Zhejiang University. Her research interests are in computer graphics, with a recent focus on applying deep learning to dynamic modeling, rendering, and editing. Her work mainly lies in hair modeling and garment simulation and animation.
